Generative artificial intelligence (AI) spans a range of model architectures. This blog unpacks three model families driving the generative AI revolution: Generative Adversarial Networks (GANs), diffusion models, and transformer-based language models. For each, it explains their strengths, weaknesses, and use cases.

Introduction

The term generative AI refers to algorithms that create new data, such as images, text, or audio, rather than only classifying or labeling existing data. Under the hood, however, different architectures achieve generation in very different ways.

By understanding these models, researchers, developers, and business leaders can make informed decisions about which approach is best suited for their needs.

Generative Adversarial Networks (GANs)

First proposed by Ian Goodfellow and colleagues in 2014, GANs introduced a new way to train generative models.

A GAN consists of two neural networks:

- Generator – turns random noise into synthetic samples that resemble the training data.

- Discriminator – attempts to distinguish between real and synthetic data.

Through an adversarial training process, in which each network is trained against the other, the generator improves until the discriminator can no longer reliably tell its outputs from real data.
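Here is a minimal sketch of the two losses behind that competition. It assumes the discriminator outputs a probability that its input is real. The original paper frames training as a minimax game: the discriminator maximizes E[log D(x)] + E[log(1 − D(G(z)))], while the generator minimizes it. The generator loss below uses the paper's "non-saturating" variant, which maximizes log D(G(z)) instead. The function names are illustrative and do not belong to any library.

```python
import math

EPS = 1e-12  # guards against log(0) when the discriminator is fully confident


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: discriminator probabilities on real samples (should move toward 1).
    d_fake: discriminator probabilities on generated samples (should move toward 0).
    """
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that can only guess (0.5 everywhere) versus a confident one.
    print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 3))  # 1.386
    print(round(discriminator_loss([0.9], [0.1]), 3))            # 0.211
    print(round(generator_loss([0.1]), 3))                       # 2.303: fakes are easy to spot
```

A real training loop alternates gradient steps: first it lowers `discriminator_loss` by updating the discriminator's weights, then it lowers `generator_loss` by updating the generator's weights. The instability described below comes from this back-and-forth, since each update changes the target the other network is chasing.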

Strengths

- High-quality images – Capable of producing stunningly realistic visuals for photo editing, style transfer, and digital art.

- Creative control – Conditional GANs enable attribute control (e.g., changing hair color in generated portraits).

- Fast generation – Once trained, GANs generate images in a single forward pass, suitable for real-time applications.
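One common way to build the conditional GANs mentioned above, used in the original conditional GAN formulation, is to feed a class label to the networks alongside their usual input. The sketch below shows only the generator side. It assumes a one-hot label concatenated with the noise vector, and the hair-color classes and helper names are hypothetical.

```python
import random

# Hypothetical attribute classes for a portrait generator.
HAIR_COLORS = ["black", "blond", "red"]


def one_hot(index, size):
    """Encode a class index as a vector with a single 1.0."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def generator_input(hair_color, noise_dim=8, rng=random):
    """Concatenate random noise z with a one-hot label y; the generator network sees [z, y]."""
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    y = one_hot(HAIR_COLORS.index(hair_color), len(HAIR_COLORS))
    return z + y


if __name__ == "__main__":
    rng = random.Random(0)
    # Same label, different noise: the label part of the input stays fixed.
    for _ in range(2):
        print(generator_input("red", rng=rng)[-3:])  # [0.0, 0.0, 1.0]
```

The noise supplies variety, and the label supplies control. Whether changing the label actually changes only the intended attribute depends on how well training taught the generator to respect it.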

Weaknesses

- Training instability – The adversarial process can fail to converge, leading to unstable results.

- Mode collapse – The generator may produce only a narrow range of outputs, ignoring parts of the data distribution.

- Non-visual limitations – Less effective for sequential data like audio or text compared to other models.

Diffusion Models

Diffusion models represent a newer class of generative models that gained prominence in 2022 with tools like Stable Diffusion and DALL-E 2.

During training, these models gradually add noise to data and learn to reverse that corruption. To generate, they start from pure noise and denoise it step by step until a coherent output emerges.
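The sketch below illustrates both directions on a single number instead of an image. It assumes the linear noise schedule and update rule of DDPM (denoising diffusion probabilistic models), one common diffusion formulation. The trained denoising network is replaced by a hypothetical `oracle_noise` function that cheats by knowing the original value. A real model has to learn to predict that noise from the noisy input and the step number alone.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, linearly spaced (DDPM-style defaults)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) up to t: how much original signal survives."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Forward process, jumping straight to step t: x_t = sqrt(ab)*x0 + sqrt(1-ab)*eps."""
    ab = alpha_bars[t]
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(ab) * x0 + math.sqrt(1.0 - ab) * eps


def oracle_noise(x_t, t, x0, alpha_bars):
    """Hypothetical stand-in for the trained network: returns the exact noise in x_t."""
    ab = alpha_bars[t]
    return (x_t - math.sqrt(ab) * x0) / math.sqrt(1.0 - ab)


def sample(x0_for_oracle, betas, alpha_bars, rng):
    """Reverse process (DDPM ancestral sampling with variance beta_t)."""
    x = rng.gauss(0.0, 1.0)  # start from pure noise
    for t in reversed(range(len(betas))):
        beta, ab = betas[t], alpha_bars[t]
        eps_hat = oracle_noise(x, t, x0_for_oracle, alpha_bars)
        # Remove the predicted noise to get the mean of the slightly cleaner x_{t-1}.
        mean = (x - beta / math.sqrt(1.0 - ab) * eps_hat) / math.sqrt(1.0 - beta)
        # Inject fresh noise on every step except the final one.
        x = mean + (math.sqrt(beta) * rng.gauss(0.0, 1.0) if t > 0 else 0.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_beta_schedule()
    alpha_bars = cumulative_alphas(betas)
    x0 = 0.7
    print(add_noise(x0, 10, alpha_bars, rng))   # early step: mostly signal
    print(add_noise(x0, 999, alpha_bars, rng))  # last step: essentially pure noise
    print(sample(x0, betas, alpha_bars, rng))   # about 0.7, because the oracle knows x0
```

The loop in `sample` runs once per step, here 1,000 times. That repeated network call is the source of the slow inference listed among the weaknesses below.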

Strengths

- High fidelity and diversity – Produce crisp, varied images without common GAN artifacts.

- Stable training – Training is generally more robust since no adversarial competition is involved.

- Text-to-image capability – When conditioned on text embeddings from a transformer-based encoder, diffusion models power text-to-image tools.

Weaknesses

- Slow inference – Generating one image typically takes dozens to hundreds of denoising steps, each a full pass through the network, versus a single pass for a GAN.

- Computational cost – Both training and inference are resource-intensive, contributing to AI’s energy consumption.

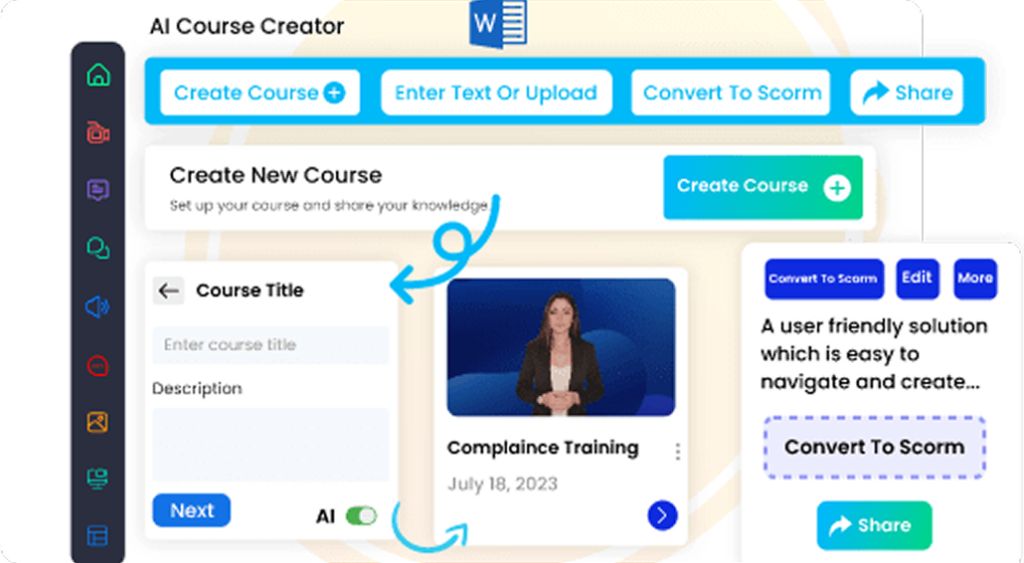

Generative AI Training

Unlock the power of AI-driven creativity. Learn how to leverage Generative AI for content creation, problem-solving, automation, and innovation across industries.

Transformer-Based Language Models

Transformers are the backbone of modern natural language processing. Introduced in 2017, the transformer architecture represents text as token embeddings and uses self-attention to capture relationships between all positions in a sequence. This design powers large language models such as GPT-4, Claude, Gemini, and LLaMA.
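At the core of self-attention is scaled dot-product attention. Each token scores every token in the sequence, turns those scores into weights with a softmax, and outputs a weighted average of their value vectors. The sketch below assumes the query, key, and value vectors are given directly. In a real transformer, they come from learned linear projections of the token embeddings and are computed for many attention heads in parallel. Decoder models such as GPT-style LLMs also mask out future tokens.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1 (max subtracted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, one query at a time."""
    d_k = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        # How strongly this token attends to each token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weight_rows.append(weights)
    return outputs, weight_rows


if __name__ == "__main__":
    # Three toy 2-dimensional token vectors used as queries, keys, and values at once.
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out, weights = self_attention(tokens, tokens, tokens)
    for row in weights:
        print([round(w, 3) for w in row])  # each row of weights sums to 1
```

Every query is compared with every key, so each output can draw on any position in the sequence. The price is cost that grows quadratically with sequence length.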

How Transformers Generate Text

Training involves predicting the next token given the preceding context. With billions of parameters, transformers learn patterns of grammar, factual knowledge, and reasoning from their training data. During inference, sampling settings such as temperature, top-k, and top-p (nucleus) sampling help balance creativity and coherence.
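Both halves of that description can be sketched over a toy vocabulary. Training minimizes the cross-entropy of the correct next token. Generation turns the model's scores (logits) into probabilities and samples from them. The sketch assumes the logits are already given, whereas a real model computes them from the context. It also applies top-k before top-p; libraries differ in such details, so treat this as an illustration rather than any specific library's behavior.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Temperature < 1 sharpens the distribution; > 1 flattens it (temperature must be > 0)."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_loss(logits, target_id):
    """Training objective: cross-entropy, i.e. -log p(correct next token | context)."""
    return -math.log(softmax(logits)[target_id])


def filter_probs(probs, top_k=None, top_p=None):
    """Apply top-k, then top-p filtering; return {token_id: renormalized probability}."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        order = order[:top_k]  # keep only the k most likely tokens
    if top_p is not None:
        mass = sum(probs[i] for i in order)
        kept, cumulative = [], 0.0
        for i in order:
            kept.append(i)
            cumulative += probs[i] / mass
            if cumulative >= top_p:
                break  # smallest set of top tokens whose probability reaches top_p
        order = kept
    total = sum(probs[i] for i in order)
    return {i: probs[i] / total for i in order}


def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Draw one token id from the filtered, temperature-scaled distribution."""
    kept = filter_probs(softmax(logits, temperature), top_k, top_p)
    ids = list(kept)
    return rng.choices(ids, weights=[kept[i] for i in ids], k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
    print(next_token_loss(logits, target_id=0))  # about 0.495: token 0 already scores highest
    print(softmax(logits, temperature=0.5))      # sharper: more deterministic output
    print(softmax(logits, temperature=2.0))      # flatter: more varied output
    print(sample_next_token(logits, temperature=0.8, top_k=3, top_p=0.9))
```

Lower temperatures and tighter top-k or top-p cutoffs favor coherent, predictable text. Higher values leave more room for unlikely tokens, which adds creativity but also risk.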

Strengths

- Versatility – Excels at text generation, translation, summarization, and Q&A. Extensions include vision and audio tasks.

- Long-range dependencies – Self-attention relates every token to every other token in the context window, which helps keep outputs coherent across long documents.

- Scalability – Performance improves with larger datasets and models, though at a cost.

Weaknesses

- Cost and environmental impact – Training requires immense computational resources and energy.

- Hallucinations – Models may produce factually incorrect but convincing text.

- Bias and fairness – Transformers inherit biases present in training data.

Choosing the Right Model

- GANs – Best for fast, photorealistic image generation.

- Diffusion models – Ideal for high-fidelity, diverse image generation, including text-to-image tasks.

- Transformers – State-of-the-art for natural language and multimodal generation.

- Specialized models – Variational autoencoders (VAEs) or flow-based models may be better suited to structured or scientific data.

Conclusion

Generative AI is not a single technique but a family of architectures. Understanding the differences between GANs, diffusion models, and transformers helps practitioners select the right tool for their application. With research advancing rapidly, hybrid models that combine these approaches are beginning to emerge.